Flutter Voice Recognition - Speech To Text - Voice Based Search

Implement a Flutter voice recognition example. We will convert voice to text and use the recognized text to search the items of a ListView.

Hello guys, in this post we are going to learn how to take voice input in Flutter.

On Android we have SpeechRecognizer; how do we achieve the same in Flutter?

We have the speech_recognition plugin, and with it we will implement this functionality.

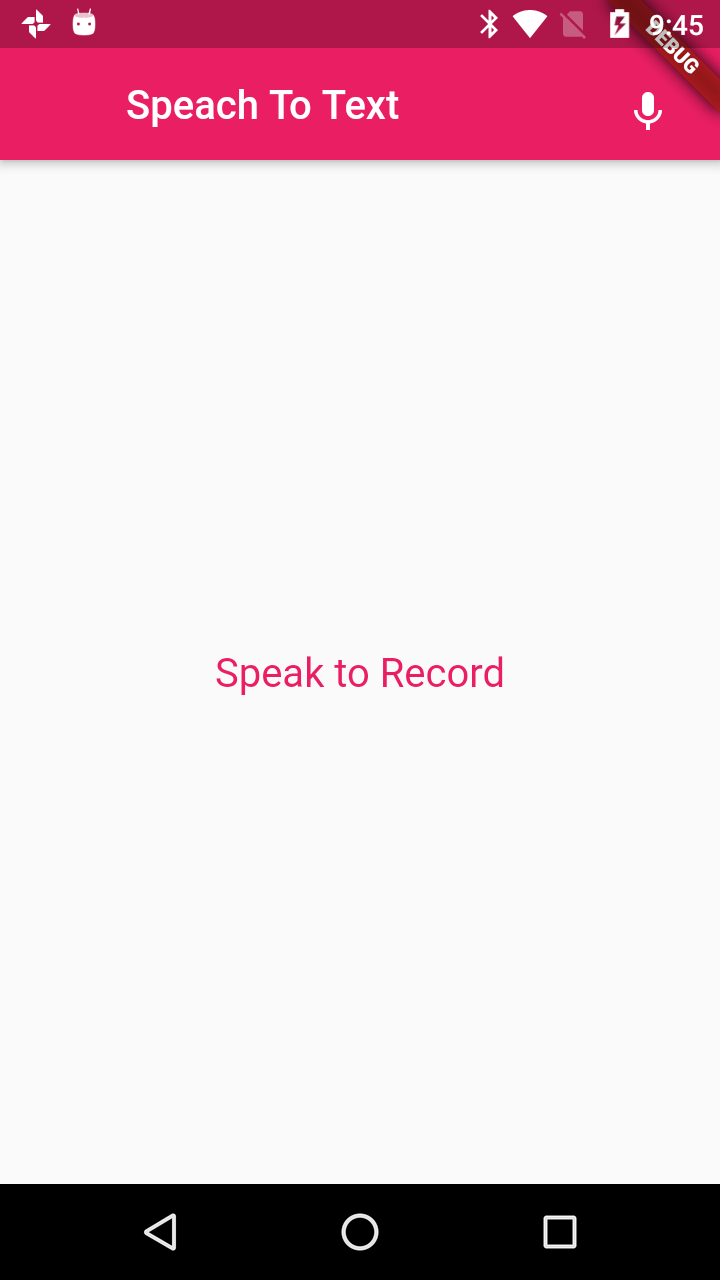

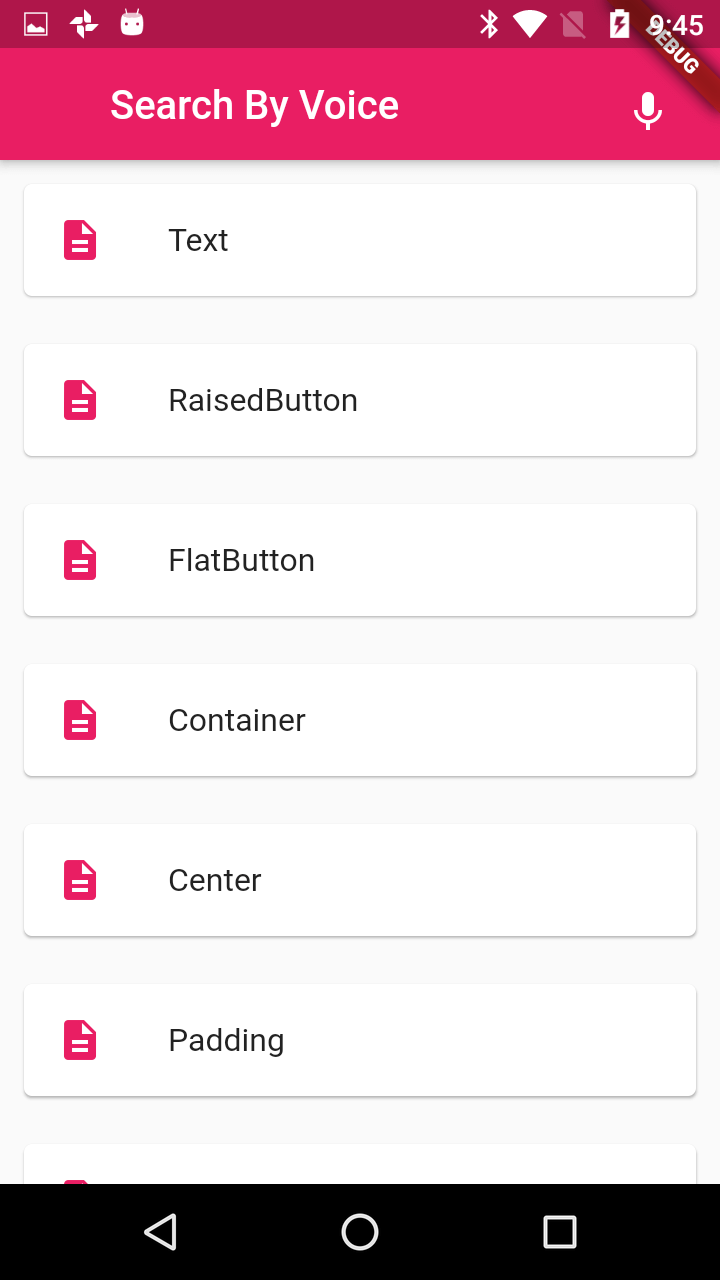

In this post I am going to show two examples

- Simple voice recognizer

- Voice-based search

Let's start

Step 1:

Create a Flutter application

Step 2:

pubspec.yaml

Add the dependency to the pubspec.yaml file

    speech_recognition: ^0.3.0+1

To handle Android and iOS specific permissions we also need to add the permission_handler plugin

    permission_handler: ^4.2.0
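For orientation, here is a minimal sketch of how the two entries might sit in pubspec.yaml. It assumes the default layout generated by `flutter create`; only the two plugin lines come from this post.

```yaml
dependencies:
  flutter:
    sdk: flutter
  # Plugins used in this post
  speech_recognition: ^0.3.0+1
  permission_handler: ^4.2.0
```

After saving the file, run `flutter pub get` so the plugins are downloaded.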

Step 3:

Create UI

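Now we are going to create a mic button that starts the speech recognizer

    Widget _buildVoiceInput({String label, VoidCallback onPressed}) {
      return Padding(
          padding: const EdgeInsets.all(12.0),
          child: Row(
            children: [
              FlatButton(
                child: Text(
                  label,
                  style: const TextStyle(color: Colors.white),
                ),
              ),
              IconButton(
                icon: Icon(Icons.mic),
                onPressed: onPressed,
              ),
            ],
          ));
    }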

Step 4:

Handle Permissions

For voice recognition we need to add a few platform-specific permissions

Android

Add the required permissions to the manifest file (AndroidManifest.xml)
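As a sketch of what the manifest entry could look like: recording from the microphone on Android requires the `RECORD_AUDIO` permission, so the assumption here is that at least this line is needed. Any further permissions are not covered by this example.

```xml
<!-- Goes inside <manifest>, above the <application> element.
     Assumption: RECORD_AUDIO is the minimum needed for microphone input. -->
<uses-permission android:name="android.permission.RECORD_AUDIO" />
```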

iOS

Key: Privacy - Microphone Usage Description.

Add this key to the Info.plist file
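In the raw Info.plist source, "Privacy - Microphone Usage Description" corresponds to the `NSMicrophoneUsageDescription` key. The sketch below also adds `NSSpeechRecognitionUsageDescription`, on the assumption that the plugin relies on the iOS Speech framework, which requires it. The description strings are placeholders.

```xml
<!-- Placeholder texts; iOS shows them in the permission dialogs. -->
<key>NSMicrophoneUsageDescription</key>
<string>This application needs to access your microphone</string>
<key>NSSpeechRecognitionUsageDescription</key>
<string>This application needs the speech recognition permission</string>
```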

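Now we need to handle the permission request at runtime, which we do with the code below

    void requestPermission() async {
      PermissionStatus permission = await PermissionHandler()
          .checkPermissionStatus(PermissionGroup.microphone);
      if (permission != PermissionStatus.granted) {
        await PermissionHandler()
            .requestPermissions([PermissionGroup.microphone]);
      }
    }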
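The function above requests the permission but does not report whether the user granted it. A minimal sketch of a variant that returns the outcome is shown here. It assumes the permission_handler 4.x API used in this post, and `ensureMicrophonePermission` is a hypothetical helper name, not part of the plugin.

```dart
import 'package:permission_handler/permission_handler.dart';

/// Hypothetical helper: returns true when microphone access is granted,
/// asking the user first if it has not been granted yet.
Future<bool> ensureMicrophonePermission() async {
  final PermissionHandler handler = PermissionHandler();
  PermissionStatus status =
      await handler.checkPermissionStatus(PermissionGroup.microphone);
  if (status != PermissionStatus.granted) {
    // requestPermissions returns one status per requested group.
    final Map<PermissionGroup, PermissionStatus> result =
        await handler.requestPermissions([PermissionGroup.microphone]);
    status = result[PermissionGroup.microphone];
  }
  return status == PermissionStatus.granted;
}
```

A caller could await this helper and only activate the recognizer when it returns true.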

Step 5:

Now it's time to create the SpeechRecognition object

Create an instance of SpeechRecognition

    _speech = new SpeechRecognition();

Check SpeechRecognition availability on the device with

    _speech.setAvailabilityHandler((result) {
      setState(() {
        _speechRecognitionAvailable = result;
      });
    });

Handle the current locale with

    _speech.setCurrentLocaleHandler(onCurrentLocale);

Handle the start of recognition with

    _speech.setRecognitionStartedHandler(onRecognitionStarted);

Handle the recognition result with

    _speech.setRecognitionResultHandler(onRecognitionResult);

Handle the completion of recognition with

    _speech.setRecognitionCompleteHandler(onRecognitionComplete);

Activate the speech recognizer with

    _speech
        .activate()
        .then((res) => setState(() => _speechRecognitionAvailable = res));

Step 6:

Now it's time to start and stop the speech recognizer

To Start

    void start() {
      _speech.listen(locale: 'en_US').then((result) {
        print('Started listening => result $result');
      });
    }

To Stop

    void stop() {
      _speech.stop().then((result) {
        setState(() {
          _isListening = result;
        });
      });
    }
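The complete code below wires the mic button with a single ternary, so `stop()` is also called when the recognizer is not available. One possible refinement, sketched here in plain Dart, is to make the decision explicit. `MicAction` and `micActionFor` are hypothetical names introduced for illustration only.

```dart
/// What a tap on the mic button should do.
enum MicAction { start, stop, none }

/// Hypothetical helper: picks the action from the two state flags.
MicAction micActionFor(bool available, bool listening) {
  if (listening) return MicAction.stop; // a session is running: stop it
  if (available) return MicAction.start; // idle and ready: start listening
  return MicAction.none; // recognizer not ready: ignore the tap
}

void main() {
  print(micActionFor(true, false)); // MicAction.start
  print(micActionFor(true, true)); // MicAction.stop
  print(micActionFor(false, false)); // MicAction.none
}
```

In the widget, the result could be mapped to `start`, `stop`, or a `null` callback, which disables the IconButton.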

Complete code for the Example

main.dart

    import 'package:flutter/material.dart';
    import 'package:permission_handler/permission_handler.dart';
    import 'package:speech_recognition/speech_recognition.dart';

    import 'listsearch.dart';

    void main() => runApp(MyApp());

    class MyApp extends StatelessWidget {
      // Gives access to a context that sits below the MaterialApp's Navigator.
      final GlobalKey<ScaffoldState> _scaffoldState = GlobalKey<ScaffoldState>();

      @override
      Widget build(BuildContext context) {
        return MaterialApp(
          title: 'Flutter Demo',
          theme: ThemeData(
            primarySwatch: Colors.pink,
          ),
          home: Scaffold(
            key: _scaffoldState,
            appBar: AppBar(title: Text("Voice Recognizer")),
            body: Container(
              child: Center(
                child: Column(
                  mainAxisSize: MainAxisSize.min,
                  children: [
                    RaisedButton(
                      onPressed: () {
                        Navigator.push(_scaffoldState.currentContext,
                            MaterialPageRoute(builder: (context) {
                          return SpeachRecognize();
                        }));
                      },
                      child: Text("Simple Speech",
                          style: TextStyle(color: Colors.white, fontSize: 20)),
                      color: Colors.pink,
                    ),
                    RaisedButton(
                      onPressed: () {
                        Navigator.push(_scaffoldState.currentContext,
                            MaterialPageRoute(builder: (context) {
                          return ListSearch();
                        }));
                      },
                      child: Text("Voice Search",
                          style: TextStyle(color: Colors.white, fontSize: 20)),
                      color: Colors.pink,
                    ),
                  ],
                ),
              ),
            ),
          ),
        );
      }
    }

    class SpeachRecognize extends StatefulWidget {
      @override
      _SpeachRecognizeState createState() => _SpeachRecognizeState();
    }

    class _SpeachRecognizeState extends State<SpeachRecognize> {
      SpeechRecognition _speech;
      bool _speechRecognitionAvailable = false;
      bool _isListening = false;
      String transcription = '';

      @override
      void initState() {
        super.initState();
        activateSpeechRecognizer();
      }

      @override
      Widget build(BuildContext context) {
        return MaterialApp(
          theme: ThemeData(primaryColor: Colors.pink),
          home: Scaffold(
            appBar: AppBar(
              title: Text('Speech To Text'),
              centerTitle: true,
              actions: [
                _buildVoiceInput(
                  // Start when the recognizer is ready and idle, otherwise stop.
                  onPressed: _speechRecognitionAvailable && !_isListening
                      ? () => start()
                      : () => stop(),
                  label: _isListening ? 'Listening...' : '',
                ),
              ],
            ),
            body: Center(
              child: Text(
                (transcription.isEmpty)
                    ? "Speak to Record"
                    : "Your text is \n\n$transcription",
                textAlign: TextAlign.center,
                style: TextStyle(color: Colors.pink, fontSize: 20),
              ),
            ),
          ),
        );
      }

      Widget _buildVoiceInput({String label, VoidCallback onPressed}) {
        return Padding(
            padding: const EdgeInsets.all(12.0),
            child: Row(
              children: [
                FlatButton(
                  child: Text(
                    label,
                    style: const TextStyle(color: Colors.white),
                  ),
                ),
                IconButton(
                  icon: Icon(Icons.mic),
                  onPressed: onPressed,
                ),
              ],
            ));
      }

      // Requests the microphone permission and registers all recognizer handlers.
      void activateSpeechRecognizer() {
        requestPermission();
        _speech = new SpeechRecognition();
        _speech.setAvailabilityHandler((result) {
          setState(() {
            _speechRecognitionAvailable = result;
          });
        });
        _speech.setCurrentLocaleHandler(onCurrentLocale);
        _speech.setRecognitionStartedHandler(onRecognitionStarted);
        _speech.setRecognitionResultHandler(onRecognitionResult);
        _speech.setRecognitionCompleteHandler(onRecognitionComplete);
        _speech
            .activate()
            .then((res) => setState(() => _speechRecognitionAvailable = res));
      }

      void start() {
        _speech.listen(locale: 'en_US').then((result) {
          print('Started listening => result $result');
        });
      }

      void cancel() {
        _speech.cancel().then((result) {
          setState(() {
            _isListening = result;
          });
        });
      }

      void stop() {
        _speech.stop().then((result) {
          setState(() {
            _isListening = result;
          });
        });
      }

      void onSpeechAvailability(bool result) =>
          setState(() => _speechRecognitionAvailable = result);

      void onCurrentLocale(String locale) =>
          setState(() => print("current locale: $locale"));

      void onRecognitionStarted() => setState(() => _isListening = true);

      void onRecognitionResult(String text) {
        setState(() {
          transcription = text;
          //stop(); //stop listening now
        });
      }

      void onRecognitionComplete() => setState(() => _isListening = false);

      void requestPermission() async {
        PermissionStatus permission = await PermissionHandler()
            .checkPermissionStatus(PermissionGroup.microphone);
        if (permission != PermissionStatus.granted) {
          await PermissionHandler()
              .requestPermissions([PermissionGroup.microphone]);
        }
      }
    }

listsearch.dart

    import 'package:flutter/material.dart';
    import 'package:permission_handler/permission_handler.dart';
    import 'package:speech_recognition/speech_recognition.dart';

    class ListSearch extends StatefulWidget {
      @override
      State<ListSearch> createState() {
        return ListSearchState();
      }
    }

    class ListSearchState extends State<ListSearch> {
      // Full list of items, and the subset currently shown in the ListView.
      final List<String> _listWidgets = <String>[];
      final List<String> _listSearchWidgets = <String>[];
      SpeechRecognition _speech;
      bool _speechRecognitionAvailable = false;
      bool _isListening = false;
      bool _isSearch = false;
      String transcription = '';

      @override
      void initState() {
        super.initState();
        _listWidgets.add("Text");
        _listWidgets.add("RaisedButton");
        _listWidgets.add("FlatButton");
        _listWidgets.add("Container");
        _listWidgets.add("Center");
        _listWidgets.add("Padding");
        _listWidgets.add("AppBar");
        _listWidgets.add("Scaffold");
        _listWidgets.add("MaterialApp");
        _listSearchWidgets.addAll(_listWidgets);
        activateSpeechRecognizer();
      }

      @override
      Widget build(BuildContext context) {
        return MaterialApp(
          theme: ThemeData(primaryColor: Colors.pink),
          home: Scaffold(
            appBar: AppBar(
              title: Text('Search By Voice'),
              centerTitle: true,
              actions: [
                _buildVoiceInput(
                  // Start when the recognizer is ready and idle, otherwise stop.
                  onPressed: _speechRecognitionAvailable && !_isListening
                      ? () => start()
                      : () => stop(),
                  label: _isListening ? 'Listening...' : '',
                ),
              ],
            ),
            body: ListView.builder(
                itemCount: _listSearchWidgets.length,
                itemBuilder: (ctx, pos) {
                  return Padding(
                    padding: const EdgeInsets.all(8.0),
                    child: Card(
                      child: ListTile(
                        leading: Icon(Icons.description, color: Colors.pink),
                        title: Text("${_listSearchWidgets[pos]}"),
                      ),
                    ),
                  );
                }),
          ),
        );
      }

      Widget _buildVoiceInput({String label, VoidCallback onPressed}) {
        return Padding(
            padding: const EdgeInsets.all(12.0),
            child: Row(
              children: [
                FlatButton(
                  child: Text(
                    label,
                    style: const TextStyle(color: Colors.white),
                  ),
                ),
                IconButton(
                  icon: Icon(Icons.mic),
                  onPressed: onPressed,
                ),
                // The clear button resets the list once a search was started.
                (_isSearch)
                    ? IconButton(
                        icon: Icon(Icons.clear),
                        onPressed: () {
                          setState(() {
                            _isSearch = false;
                            _listSearchWidgets.clear();
                            _listSearchWidgets.addAll(_listWidgets);
                          });
                        },
                      )
                    : Text(""),
              ],
            ));
      }

      // Requests the microphone permission and registers all recognizer handlers.
      void activateSpeechRecognizer() {
        requestPermission();
        _speech = new SpeechRecognition();
        _speech.setAvailabilityHandler((result) {
          setState(() {
            _speechRecognitionAvailable = result;
          });
        });
        _speech.setCurrentLocaleHandler(onCurrentLocale);
        _speech.setRecognitionStartedHandler(onRecognitionStarted);
        _speech.setRecognitionResultHandler(onRecognitionResult);
        _speech.setRecognitionCompleteHandler(onRecognitionComplete);
        _speech
            .activate()
            .then((res) => setState(() => _speechRecognitionAvailable = res));
      }

      void start() {
        // Rebuild right away so the clear button becomes visible.
        setState(() => _isSearch = true);
        _speech.listen(locale: 'en_US').then((result) {
          print('Started listening => result $result');
        });
      }

      void cancel() {
        _speech.cancel().then((result) {
          setState(() {
            _isListening = result;
          });
        });
      }

      void stop() {
        _speech.stop().then((result) {
          setState(() {
            _isListening = result;
          });
        });
      }

      void onSpeechAvailability(bool result) =>
          setState(() => _speechRecognitionAvailable = result);

      void onCurrentLocale(String locale) =>
          setState(() => print("current locale: $locale"));

      void onRecognitionStarted() => setState(() => _isListening = true);

      void onRecognitionResult(String text) {
        setState(() {
          transcription = text;
          // Keep only the items that contain the recognized text (case-insensitive).
          _listSearchWidgets.clear();
          for (String k in _listWidgets) {
            if (k.toUpperCase().contains(text.toUpperCase())) {
              _listSearchWidgets.add(k);
            }
          }
          //stop(); //stop listening now
        });
      }

      void onRecognitionComplete() => setState(() => _isListening = false);

      void requestPermission() async {
        PermissionStatus permission = await PermissionHandler()
            .checkPermissionStatus(PermissionGroup.microphone);
        if (permission != PermissionStatus.granted) {
          await PermissionHandler()
              .requestPermissions([PermissionGroup.microphone]);
        }
      }
    }
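The filtering done inside onRecognitionResult can also be pulled out into a small pure function, which makes it easy to try without a device or microphone. The sketch below is one way to do that. `filterItems` is a hypothetical name, and trimming the recognized text is an added assumption that is not in the code above.

```dart
/// Hypothetical helper: returns the items whose name contains [query],
/// ignoring case. An empty query returns a copy of all items.
List<String> filterItems(List<String> items, String query) {
  // Assumption: surrounding whitespace in the recognized text is not meaningful.
  final String needle = query.trim().toUpperCase();
  if (needle.isEmpty) return List<String>.from(items);
  return items.where((item) => item.toUpperCase().contains(needle)).toList();
}

void main() {
  final widgets = ['Text', 'RaisedButton', 'FlatButton', 'Container'];
  print(filterItems(widgets, 'button')); // [RaisedButton, FlatButton]
  print(filterItems(widgets, 'TEXT')); // [Text]
}
```

Inside the state class, the result handler could then replace the contents of `_listSearchWidgets` with `filterItems(_listWidgets, text)` in its `setState` call.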