Flutter Face Recognition - Face Detection using Firebase ML Kit

Learn how Firebase ML Kit detects faces in images by using algorithms that return rectangular bounding boxes for easy visualization - RRTutors.

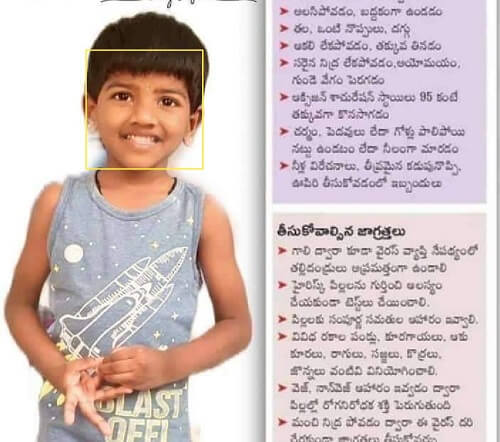

In this Flutter programming example we will learn how to implement face detection in a Flutter application using Firebase ML Kit. The example application detects human faces in an image picked from the gallery.


Application Use Cases

Firebase ML Kit lets us detect faces in an image file. The ML Kit algorithm returns a rectangular bounding box for each detected face, which we can then draw over the image.

So let's get started with the face detection application.

Step 1: Create a Flutter application in Android Studio or any other IDE

Step 2: To work with Firebase ML Kit we need to connect our Flutter application to Firebase. Read "Firebase Integrate in flutter application", which we covered in our previous article.

Step 3: Add the ML Kit dependencies to the pubspec.yaml file

    dependencies:
      flutter:
        sdk: flutter
      firebase_ml_vision: ^0.9.10
      image_picker: ^0.6.7+17
      firebase_core: ^0.5.3


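Step 4: Initialize the Firebase instance

Add the ML Kit meta-data entry inside the <application> element of the Android manifest file (android/app/src/main/AndroidManifest.xml), so that the face detection model is downloaded when the app is installed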

    <meta-data
        android:name="com.google.firebase.ml.vision.DEPENDENCIES"
        android:value="face" />
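The manifest entry only concerns the on-device model. On the Dart side, the firebase_core package from Step 3 provides Firebase.initializeApp(). The following is a minimal sketch, assuming the app has already been connected to Firebase as described in Step 2. Whether this version of firebase_ml_vision strictly requires the call is an assumption, and the placeholder home widget is only there for illustration.

```dart
import 'package:firebase_core/firebase_core.dart';
import 'package:flutter/material.dart';

Future<void> main() async {
  // Plugins need the binding to exist before runApp is called.
  WidgetsFlutterBinding.ensureInitialized();

  // Initializes the default Firebase app from the native configuration
  // files added in Step 2.
  await Firebase.initializeApp();

  runApp(
    MaterialApp(
      debugShowCheckedModeBanner: false,
      // Placeholder widget (assumption); the real app would use its own
      // home widget here.
      home: Scaffold(body: Center(child: Text('Firebase ready'))),
    ),
  );
}
```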


Step 5: Write the code for face detection. Here we pick an image from the gallery and run the face detection algorithm on the selected image. The ML Kit algorithm returns a rectangular bounding box for each detected face, and we draw that box over the face area on the image.

    import 'dart:io';
    import 'dart:ui' as ui;

    import 'package:firebase_ml_vision/firebase_ml_vision.dart';
    import 'package:flutter/material.dart';
    import 'package:image_picker/image_picker.dart';

    void main() => runApp(
          MaterialApp(
            debugShowCheckedModeBanner: false,
            home: MyApp(),
          ),
        );

    class MyApp extends StatefulWidget {
      @override
      _MyAppState createState() => _MyAppState();
    }

    class _MyAppState extends State<MyApp> {
      File _imageFile;
      List<Face> _faces;
      bool isLoading = false;
      ui.Image _image;
      final picker = ImagePicker();

      @override
      Widget build(BuildContext context) {
        return Scaffold(
          floatingActionButton: FloatingActionButton(
            onPressed: _getImage,
            child: Icon(Icons.add_a_photo),
          ),
          body: isLoading
              ? Center(child: CircularProgressIndicator())
              : (_imageFile == null)
                  ? Center(child: Text('no image selected'))
                  : Center(
                      child: FittedBox(
                        // Size the canvas to the image so the bounding boxes,
                        // which are in image pixel coordinates, line up.
                        child: SizedBox(
                          width: _image.width.toDouble(),
                          height: _image.height.toDouble(),
                          child: CustomPaint(
                            painter: FacePainter(_image, _faces),
                          ),
                        ),
                      ),
                    ),
        );
      }

      // Picks an image from the gallery and runs face detection on it.
      Future<void> _getImage() async {
        final pickedFile = await picker.getImage(source: ImageSource.gallery);
        // The picker returns null when the user cancels.
        if (pickedFile == null) return;

        setState(() {
          isLoading = true;
        });

        final file = File(pickedFile.path);
        final image = FirebaseVisionImage.fromFile(file);
        final faceDetector = FirebaseVision.instance.faceDetector();
        final List<Face> faces = await faceDetector.processImage(image);
        await faceDetector.close(); // Release the native detector.

        if (!mounted) return;
        setState(() {
          _imageFile = file;
          _faces = faces;
        });
        await _loadImage(file);
      }

      // Decodes the file into a ui.Image that the painter can draw.
      Future<void> _loadImage(File file) async {
        final data = await file.readAsBytes();
        final decoded = await decodeImageFromList(data);
        if (!mounted) return;
        setState(() {
          _image = decoded;
          isLoading = false;
        });
      }
    }

    // Draws the image and a yellow rectangle around every detected face.
    class FacePainter extends CustomPainter {
      final ui.Image image;
      final List<Face> faces;
      final List<Rect> rects = [];

      FacePainter(this.image, this.faces) {
        for (var i = 0; i < faces.length; i++) {
          rects.add(faces[i].boundingBox);
        }
      }

      @override
      void paint(ui.Canvas canvas, ui.Size size) {
        final Paint paint = Paint()
          ..style = PaintingStyle.stroke
          ..strokeWidth = 2.0
          ..color = Colors.yellow;

        canvas.drawImage(image, Offset.zero, Paint());
        for (var i = 0; i < faces.length; i++) {
          canvas.drawRect(rects[i], paint);
        }
      }

      @override
      bool shouldRepaint(FacePainter old) {
        return image != old.image || faces != old.faces;
      }
    }
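The call to faceDetector() in the code above uses the plugin's default settings. As a hedged sketch, assuming the firebase_ml_vision version from Step 3, the detector can also be configured through FaceDetectorOptions, for example to favour accuracy over speed and to report a smile probability. The helper name detectFaces is hypothetical and not part of the original example.

```dart
import 'dart:io';

import 'package:firebase_ml_vision/firebase_ml_vision.dart';

// Hypothetical helper (not part of the original example): runs face
// detection on [file] with non-default options and returns the faces.
Future<List<Face>> detectFaces(File file) async {
  final image = FirebaseVisionImage.fromFile(file);
  final detector = FirebaseVision.instance.faceDetector(
    FaceDetectorOptions(
      mode: FaceDetectorMode.accurate, // favour accuracy over speed
      enableClassification: true, // needed for smilingProbability
    ),
  );
  try {
    final faces = await detector.processImage(image);
    for (final face in faces) {
      // boundingBox is in the pixel coordinates of the input image.
      print('box=${face.boundingBox} smile=${face.smilingProbability}');
    }
    return faces;
  } finally {
    await detector.close(); // release native resources
  }
}
```

In the example app, a helper like this could stand in for the three detector lines inside _getImage, which would keep the widget code focused on state updates.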

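The example sizes its SizedBox to the image's pixel dimensions and lets FittedBox shrink the whole canvas, so the bounding boxes can be drawn unchanged. If the image were instead drawn at a different size, each box would need to be scaled by the same factors. The sketch below shows that arithmetic in plain Dart. The function name scaleBox is hypothetical, and dart:math's Rectangle stands in for Flutter's Rect so the snippet runs without the Flutter SDK.

```dart
import 'dart:math';

// Hypothetical helper: maps a bounding box from image pixel coordinates
// to the coordinates of a canvas that shows the image at another size.
Rectangle<double> scaleBox(
  Rectangle<double> box,
  double imageWidth,
  double imageHeight,
  double canvasWidth,
  double canvasHeight,
) {
  // Independent scale factors for the horizontal and vertical axes.
  final sx = canvasWidth / imageWidth;
  final sy = canvasHeight / imageHeight;
  return Rectangle<double>(
    box.left * sx,
    box.top * sy,
    box.width * sx,
    box.height * sy,
  );
}
```

For instance, a box at (100, 200) measuring 50 by 80 in a 1000 by 2000 image maps to (50, 100) measuring 25 by 40 on a 500 by 1000 canvas.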

Step 6: Run the application